PuppetGAN: Cross-Domain Image Manipulation by Demonstration

ICCV'19 Oral

Abstract

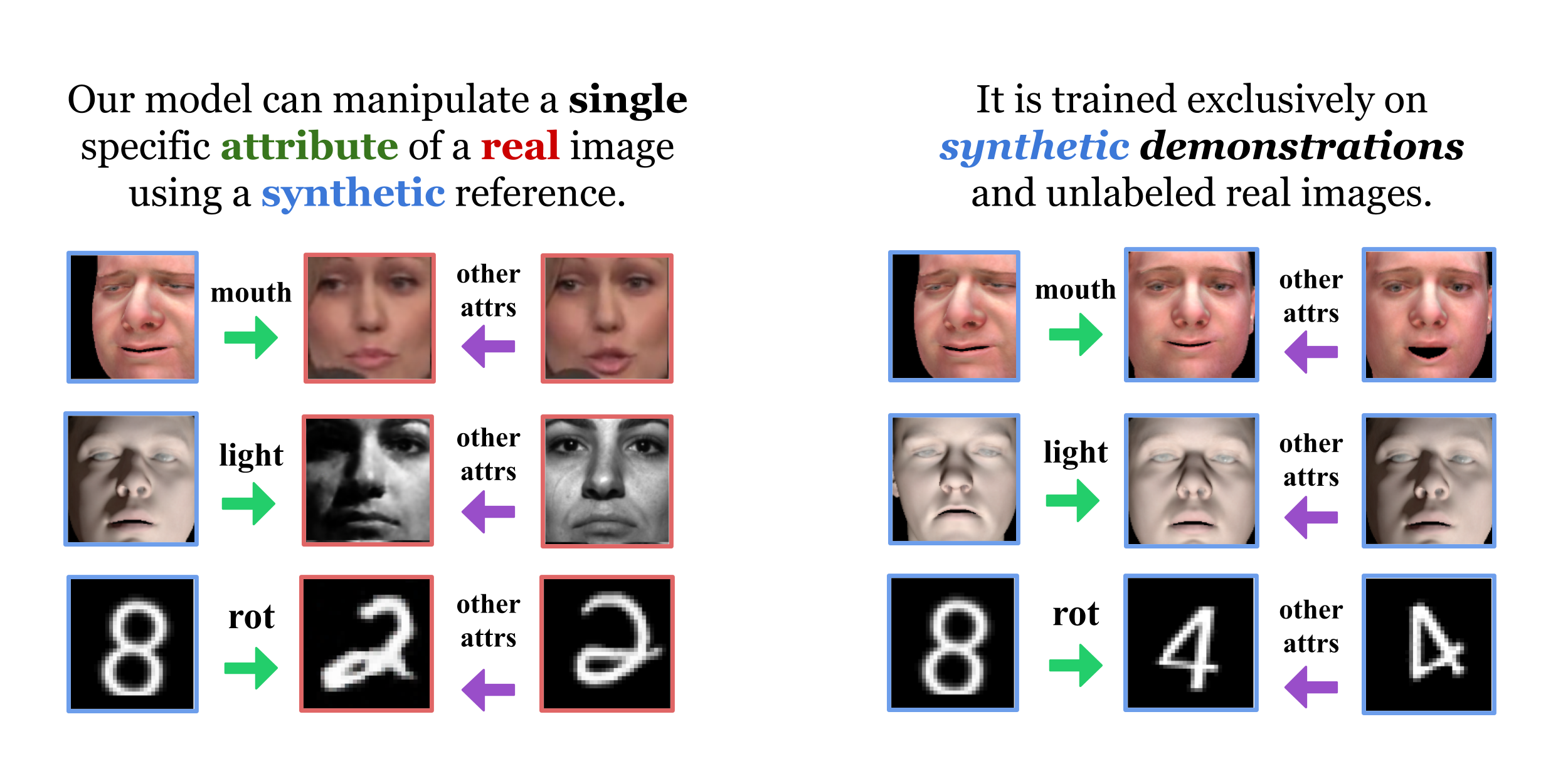

In this work, we propose a model that can manipulate individual visual attributes of objects in a real scene using examples of how respective attribute manipulations affect the output of a simulation. As an example, we train our model to manipulate the expression of a human face using nonphotorealistic 3D renders of a face with varied expression. Our model manages to preserve all other visual attributes of a real face, such as head orientation, even though this and other attributes are not labeled in either the real or the synthetic domain. Since our model learns to manipulate a specific property in isolation using only "synthetic demonstrations" of such manipulations without explicitly provided labels, it can be applied to shape, texture, lighting, and other properties that are difficult to measure or represent as real-valued vectors. We measure the degree to which our model preserves other attributes of a real image when a single specific attribute is manipulated. We use digit datasets to analyze how discrepancy in attribute distributions affects the performance of our model, and demonstrate results in a far more difficult setting: learning to manipulate real human faces using nonphotorealistic 3D renders.

Data and Code

An unofficial implementation by @GiorgosKarantonis is available on GitHub.

To request the data, please email usmn[at]bu[dot]edu, stating your university affiliation and including a link to a homepage hosted on the university's domain.

Reference

If you find this useful in your work, please consider citing:

@inproceedings{usman2019puppetgan,
  title={Puppet{GAN}: Cross-domain image manipulation by demonstration},
  author={Usman, Ben and Dufour, Nick and Saenko, Kate and Bregler, Chris},
  booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision},
  pages={9450--9458},
  year={2019}
}