RIFT: Disentangled Unsupervised Image Translation via Restricted Information Flow

Ben Usman* Dina Bashkirova* Kate Saenko

Boston University

In WACV 2023


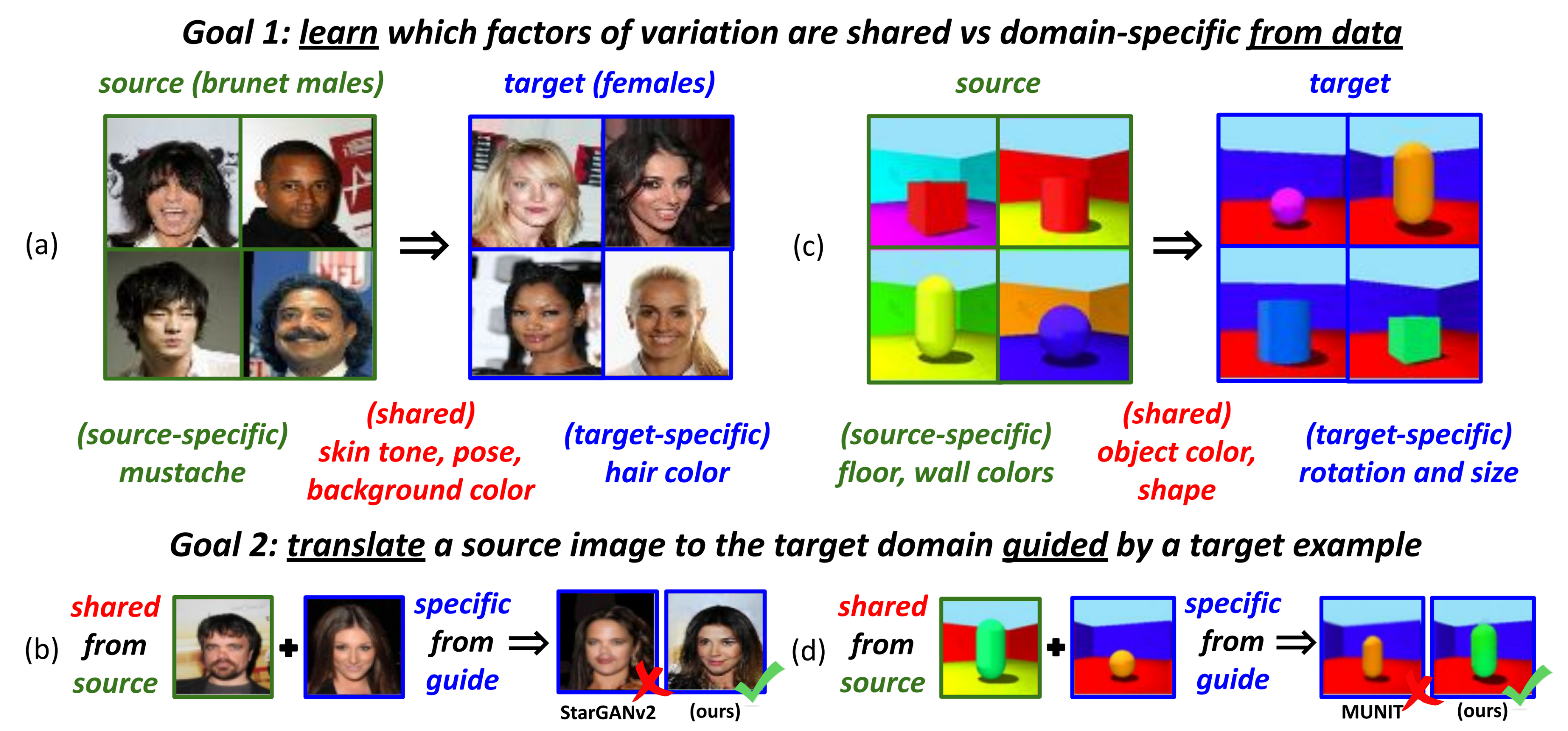

We propose RIFT, a many-to-many image translation method that infers from data which attributes are domain-specific. Rather than relying on hard-coded architectural inductive biases, it constrains the information flow through the network using translation honesty losses and a penalty on the capacity of the domain-specific embedding.
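The capacity penalty mentioned above can be illustrated with a minimal sketch. This page does not give its exact form, so the code below assumes one common choice: a variational-information-bottleneck-style KL divergence between a diagonal Gaussian domain-specific embedding and a standard normal prior. The names `capacity_penalty`, `penalized_loss`, and the weight `beta` are hypothetical and are not taken from the RIFT code.

```python
import math
from typing import Sequence


def capacity_penalty(mu: Sequence[float], logvar: Sequence[float]) -> float:
    """KL( N(mu, diag(exp(logvar))) || N(0, I) ) in nats.

    Hypothetical helper (assumed VIB-style form, not RIFT's exact loss):
    it upper-bounds how much information the embedding can carry and is
    zero only when the embedding distribution matches the prior.
    """
    if len(mu) != len(logvar):
        raise ValueError("mu and logvar must have the same length")
    # Closed-form KL between a diagonal Gaussian and a standard normal.
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, logvar))


def penalized_loss(base_loss: float, mu: Sequence[float],
                   logvar: Sequence[float], beta: float = 0.1) -> float:
    """Add the capacity penalty to some other loss, weighted by a hypothetical beta."""
    return base_loss + beta * capacity_penalty(mu, logvar)


# An embedding that matches the prior carries no information and costs nothing.
print(capacity_penalty([0.0, 0.0], [0.0, 0.0]))
# Shifting one mean away from zero is penalized.
print(capacity_penalty([1.0, 0.0], [0.0, 0.0]))
```

In a setup like this, increasing `beta` limits how much the domain-specific embedding can encode, so information it cannot carry has to pass through the shared pathway instead.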

Abstract

Unsupervised image-to-image translation methods aim to map images from one domain into plausible examples from another domain while preserving the structure shared across the two domains. In the many-to-many setting, an additional guidance example from the target domain determines the domain-specific factors of variation of the generated image. In the absence of attribute annotations, methods have to infer from data during training which factors of variation are specific to each domain. In this paper, we show that many state-of-the-art architectures implicitly treat textures and colors as always being domain-specific, and thus fail when they are not. We propose a new method called RIFT that does not rely on such inductive architectural biases and instead infers directly from data which attributes are domain-specific and which are shared. As a result, RIFT achieves consistently high cross-domain manipulation accuracy across multiple datasets spanning a wide variety of domain-specific and shared factors of variation.

Citation

@inproceedings{usman2023rift,
  author    = {Usman, Ben and Bashkirova, Dina and Saenko, Kate},
  title     = {{RIFT}: Disentangled Unsupervised Image Translation via Restricted Information Flow},
  booktitle = {Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV)},
  month     = {January},
  year      = {2023}
}