Visual Domain Adaptation Challenge

News

The 2018 VisDA challenge is live and open for registration! It features a larger, more diverse dataset and two new competition tracks: open-set object classification and object detection. Visit the challenge website for more details and to register! In the meantime, the 2017 test server is still running if you wish to submit to it.

- November 1: TASK-CV workshop presentations available.

- October 10: Winners announced!

- September 30: Thank you to all who participated in the challenge! Stay tuned for the winners announcement.

- September 10: Prizes announced! See the Prizes section for details.

- September 8: Testing data released.

- July 13: Our competition is featured on CodaLab!

- June 23: Evaluation servers are open for submission.

- June 22: The deadline for abstract submission to the TASK-CV workshop has been extended.

- June 19: Training data, validation data and DevKits released.

Winners of the VisDA-2017 Challenge

Classification track

| # | Team Name | Affiliation | Score |
|---|---|---|---|
| 1 | GF_ColourLab_UEA | University of East Anglia, Colour Lab [arxiv] [code] | 92.8 |
| 2 | NLE_DA | NAVER LABS Europe | 87.7 |
| 3 | BUPT_OVERFIT | Beijing University of Posts and Telecommunications | 85.4 |

Segmentation track

| # | Team Name | Affiliation | Score |
|---|---|---|---|
| 1 | RTZH [pdf] | Microsoft Research Asia | 47.5 |
| 2 | _piotr_ | University of Oxford, Active Vision Laboratory | 44.7 |
| 3 | whung | University of California, Merced, Vision and Learning Lab | 42.4 |

Thank you to those who attended TASK-CV and made it a success! Below are the VisDA challenge presentations from the workshop organizers and from the top-performing teams in each track.

- VisDA organizers presentation

- Classification track runner-up presentation

- Classification track winner presentation

- Segmentation track honorable mention presentation

- Segmentation track winner presentation

Overview

We are pleased to announce the 2017 Visual Domain Adaptation (VisDA2017) Challenge! It is well known that the success of machine learning methods on visual recognition tasks depends heavily on access to large labeled datasets. Unfortunately, performance often drops significantly when a model is presented with data from a new deployment domain that it did not see during training, a problem known as dataset shift. The VisDA challenge aims to test the ability of domain adaptation methods to transfer knowledge from a source domain and adapt it to novel target domains.

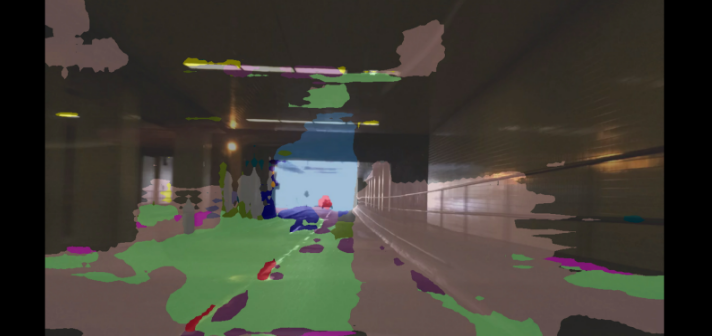

Caption: An example of a deep learning model failing to properly segment a scene into semantic categories such as road (green) and building (gray) because the input image looks different from its training data. Unsupervised domain adaptation methods aim to use labeled samples from the training domain and large volumes of unlabeled samples from the test domain to reduce prediction error on the test domain. [1]

The competition will take place from June to September 2017, and the top-performing teams will be invited to present their results at the TASK-CV workshop at ICCV 2017 in Venice, Italy. This year’s challenge focuses on synthetic-to-real visual domain shifts and includes two tracks:

- Classification Track

- Segmentation Track

Participants are welcome to enter one or both tracks.

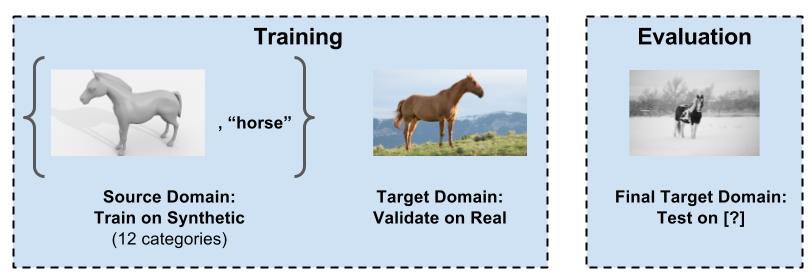

Classification Track

In this track, the goal is to develop an unsupervised domain adaptation method for image classification. Participants will be given three datasets, each containing the same object categories:

- training domain (source): synthetic 2D renderings of 3D models generated from different angles and with different lighting conditions

- validation domain (target): a photo-realistic or real-image validation domain that participants can use to evaluate performance of their domain adaptation methods

- test domain (target): a new real-image test domain, different from the validation domain and without labels. The test set will be released shortly before the end of the competition

The reason for using different target domains for validation and test is to evaluate proposed models as out-of-the-box domain adaptation tools. This setting more closely mimics realistic deployment scenarios, where the target domain is unknown at training time, and it discourages algorithms that are designed to handle only one particular target domain.
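
This page does not define the classification score; the DevKit ReadMe is authoritative. As a hedged sketch, assuming the score is accuracy averaged over object categories (so that frequent categories cannot dominate the result), the computation could look like this. The function name and the toy labels are hypothetical.

```python
from collections import defaultdict


def mean_per_class_accuracy(y_true, y_pred):
    """Return (mean of per-class accuracies in %, dict of per-class accuracies).

    Assumed metric for illustration only; use the DevKit scorer for real results.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred in zip(y_true, y_pred):
        total[truth] += 1
        if truth == pred:
            correct[truth] += 1
    # Accuracy is computed per ground-truth class, then averaged uniformly.
    per_class = {c: correct[c] / total[c] for c in total}
    score = 100.0 * sum(per_class.values()) / len(per_class)
    return score, per_class


# Toy example: 3 of 4 images are correct (75% overall), but the "plane"
# class is never recognized, so the class-averaged score is only 50%.
score, per_class = mean_per_class_accuracy(
    ["car", "car", "car", "plane"],
    ["car", "car", "car", "car"],
)
print(score)  # 50.0
```

Averaging per class rather than per image rewards methods that adapt well on every category, which matters when the target domain is class-imbalanced.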

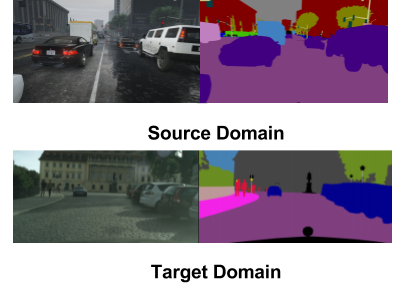

Segmentation Track

In this track, the goal is to develop an algorithm that can adapt from synthetic dashcam views to real dashcam footage for semantic image segmentation. The training data include pixel-level semantic annotations for 19 classes. We will also provide validation and testing data, following the same protocol:

- training domain (source): synthetic dashcam renderings along with semantic segmentation labels for 19 classes

- validation domain (target): a real-world collection of dashcam images with semantic segmentation labels for the same 19 classes, to be used for validating segmentation performance after unsupervised adaptation

- test domain (target): a collection of real world dashcam images, different from the validation domain and without labels. The test set will be released shortly before the end of the competition
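
The segmentation score is likewise not defined on this page. Assuming the common mean intersection-over-union (mIoU) over the 19 classes, a minimal pure-Python sketch is below. It treats label maps as flat lists of class indices and assumes that the value 255 marks unlabeled pixels; the helper names and the ignore value are hypothetical conventions, not taken from the DevKit.

```python
NUM_CLASSES = 19    # number of annotated classes in this track
IGNORE_INDEX = 255  # assumed label for unannotated pixels


def confusion_matrix(gt, pred, num_classes=NUM_CLASSES, ignore_index=IGNORE_INDEX):
    """Count (ground truth, prediction) pairs over flattened label maps."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for g, p in zip(gt, pred):
        if g == ignore_index:
            continue  # unannotated pixels do not affect the score
        cm[g][p] += 1
    return cm


def mean_iou(cm):
    """Average IoU_c = TP / (TP + FP + FN) over classes that occur at all, in %."""
    n = len(cm)
    ious = []
    for c in range(n):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                       # class c predicted as another class
        fp = sum(cm[r][c] for r in range(n)) - tp  # other classes predicted as c
        denom = tp + fp + fn
        if denom > 0:  # skip classes absent from both ground truth and predictions
            ious.append(tp / denom)
    return 100.0 * sum(ious) / len(ious) if ious else 0.0


# Toy example: two classes present, one ignored pixel.
# IoU(class 0) = 1/2, IoU(class 1) = 2/3, so mIoU = 58.33%.
cm = confusion_matrix([0, 0, 1, 1, 255], [0, 1, 1, 1, 0])
print(round(mean_iou(cm), 2))  # 58.33
```

In a full evaluation the confusion matrix would typically be accumulated over all test images before computing IoU, so that each pixel counts equally.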

Prizes

We are excited to announce prizes for the top-performing teams! The top three teams in each of the classification and segmentation tracks will receive:

- 1st place: $2,000

- 2nd place: $500

- 3rd place: $250

Browse Data

If you would like to browse sample images from each of the datasets currently available please use the links below:

Classification challenge:

Segmentation challenge:

Download

Please follow the instructions in the VisDA GitHub repository to download the data and development kits for the classification and segmentation tracks. Training, validation and testing data are now available for download. We have also included baseline models and instructions for training several existing domain adaptation methods.

The DevKits are currently in beta. If you find any bugs, please open an issue in our GitHub repository rather than emailing the organizers directly.

The tech report describing the dataset and baseline experiments is available on arXiv. If you use the data, code, or their derivatives, please consider citing:

@misc{visda2017,
  Author = {Xingchao Peng and Ben Usman and Neela Kaushik and Judy Hoffman and Dequan Wang and Kate Saenko},
  Title = {VisDA: The Visual Domain Adaptation Challenge},
  Year = {2017},
  Eprint = {arXiv:1710.06924},
}

Evaluation

We will use CodaLab to evaluate submissions and maintain a leaderboard. To register for the evaluation server, please create an account on CodaLab and enter as a participant in the competition for the track you wish to join (classification or segmentation).

If you are working as a team, please register using one account for the whole team and list the names of all team members. These can be modified in the “User Settings” tab. See the challenge rules for more details.

Please refer to the instructions in the DevKit ReadMe file for specific details on submission formatting and evaluation for the classification and segmentation challenges.

Rules

The VisDA challenge tests adaptation and model transfer, so its rules differ from those of most challenges. Please read them carefully.

Supervised Training: Teams may only submit test results from models trained on the source domain data and, optionally, pre-trained on ImageNet. Note that this may differ from other challenges: because this year’s VisDA challenge tests how well models adapt from synthetic to real data, training on the validation domain is not allowed for test submissions. To ensure a fair comparison, we also do not allow any other external training data, modification of the provided training dataset, or any form of manual data labeling.

Pretraining on ImageNet: If you pre-train on the ImageNet ILSVRC classification training data, only the weights can be transferred, not the actual classifiers for specific objects; i.e., participants should not manually exploit correspondences between ImageNet output labels and labels in the challenge data. Please indicate in your method description which pre-trained weights were used to initialize the model. Teams that place at the top of the “no ImageNet pretraining” track will receive special recognition.
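
"Transfer only the weights" usually means keeping a pretrained backbone and discarding its 1000-way ImageNet classifier, which is replaced by a freshly initialized head for the challenge categories. The sketch below illustrates the filtering step on a plain dictionary of parameters. The `fc.` prefix is an assumption modeled on ResNet-style checkpoints, and all parameter names are hypothetical.

```python
def backbone_weights(state_dict, head_prefix="fc."):
    """Keep pretrained feature-extractor weights; drop the ImageNet classifier.

    `head_prefix` is an assumed naming convention for the classifier layer.
    """
    return {name: w for name, w in state_dict.items()
            if not name.startswith(head_prefix)}


# Hypothetical checkpoint; the lists stand in for real weight tensors.
imagenet_ckpt = {
    "conv1.weight": [0.0],
    "layer1.0.conv1.weight": [0.0],
    "fc.weight": [0.0],  # ImageNet classifier: must not be reused
    "fc.bias": [0.0],
}
print(sorted(backbone_weights(imagenet_ckpt)))
# ['conv1.weight', 'layer1.0.conv1.weight']
```

The new classification head would then be trained on the labeled source data, so no ImageNet label correspondences are used.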

Unsupervised training: Models can be adapted (trained) on the test data in an unsupervised way, i.e., without labels. Adaptation on the validation data, even unsupervised, is not allowed for test submissions. Note that the validation labels have been released to facilitate algorithm development.
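
The data-usage rules for test submissions can be summarized as a small lookup table. The sketch below is a hypothetical self-check, not an official tool: each training step is a (split, uses_labels) pair, and any combination not listed, including external datasets and labeled use of the test set, is conservatively treated as forbidden.

```python
from enum import Enum


class Split(Enum):
    SOURCE = "synthetic training set"
    VALIDATION = "real validation set"
    TEST = "real test set"
    IMAGENET = "ImageNet ILSVRC (pre-training only)"


# (split, uses_labels) -> allowed for a model whose predictions go to the test server.
# Test labels are not released, so (Split.TEST, True) is absent and thus forbidden.
ALLOWED_FOR_TEST_SUBMISSION = {
    (Split.SOURCE, True): True,        # supervised training on source data
    (Split.SOURCE, False): True,       # unlabeled use of source data
    (Split.IMAGENET, True): True,      # optional pre-training; transfer weights only
    (Split.TEST, False): True,         # unsupervised adaptation on test images
    (Split.VALIDATION, True): False,   # no training on validation data
    (Split.VALIDATION, False): False,  # no adaptation on validation data either
}


def rule_violations(plan):
    """Return the steps of a training plan that the rules do not allow."""
    return [step for step in plan
            if not ALLOWED_FOR_TEST_SUBMISSION.get(step, False)]


plan = [(Split.IMAGENET, True), (Split.SOURCE, True),
        (Split.TEST, False), (Split.VALIDATION, False)]
print(rule_violations(plan))  # [(<Split.VALIDATION: 'real validation set'>, False)]
```

The validation set can still be used offline to test adaptation, as the FAQ notes; the table only covers models whose predictions are submitted to the test server.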

Source Models: The performance of a domain adaptation algorithm greatly depends on the baseline performance of the model trained only on source data. We ask that teams submit two sets of results: 1) predictions obtained only with the source-trained model, and 2) predictions obtained with the adapted model. See the development kit for submission formatting details.

Leaderboard: The main leaderboard for each competition track will show results of adapted models and will be used to determine the final team ranks. The expanded leaderboard will additionally show each team's source-only models, i.e., those trained only on the source domain without any adaptation. These results are useful for estimating how much a method improves upon its source-only model, but will not be used to determine team ranks.
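
The relationship between the two leaderboards can be illustrated with a small sketch: ranks come from the adapted score alone, while the source-only score is used only to report the gain from adaptation. The `Entry` class, the team names and the scores below are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Entry:
    team: str
    source_only: float  # score of the model trained only on source data
    adapted: float      # score of the adapted model

    @property
    def gain(self) -> float:
        """Improvement of the adapted model over its source-only baseline."""
        return self.adapted - self.source_only


def main_leaderboard(entries):
    """Rank by adapted score only; the gain is informational."""
    return sorted(entries, key=lambda e: e.adapted, reverse=True)


board = main_leaderboard([Entry("team_b", 40.0, 72.0),
                          Entry("team_a", 60.0, 75.0)])
for e in board:
    print(e.team, e.adapted, e.gain)
# team_a 75.0 15.0
# team_b 72.0 32.0
```

Here team_b gains more from adaptation, but team_a still ranks first because only adapted scores determine the final ranking.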

FAQ

- Can we train models on data other than the source domain?

Participants may elect to pre-train their models, but only on ImageNet. Please refer to the challenge evaluation instructions in the DevKit for more details.

- Do we have to use the provided baseline models?

No, these are provided for your convenience and are optional.

- How many submissions can each team make per competition track?

For the validation domain, each team is limited to 20 uploads per day, with no restriction on the total number of submissions. For the test domain, each team is limited to 1 upload per day and 20 uploads in total. Each team must submit results from a single account. Do not create multiple accounts for a single project to circumvent these limits; doing so will result in disqualification.

- Can multiple teams enter from the same research group?

Yes, as long as each team is made up of different members.

- Can external data be used?

The allowed training data consists of the VisDA 2017 training set. The VisDA 2017 validation set can be used to test adaptation to a target domain offline, but cannot be used to train the final submitted model (with or without labels). Optional initialization of models with weights pre-trained on ImageNet is allowed and must be declared in the submission. Please see the challenge rules for more details.

- Are challenge participants required to reveal all details of their methods?

Participants are encouraged to include a brief write-up of their methods when submitting their results. However, this is not mandatory.

- Do participants need to adhere to TASK-CV abstract submission deadlines to participate in the challenge?

Submission of a TASK-CV workshop abstract is not required to participate in the challenge. The top-performing teams will be invited to present their approaches at the workshop, even if they did not submit an abstract.

- When is the final submission deadline?

All results must be submitted by September 29th at 11:59pm ET. On CodaLab, this is equivalent to September 30th at 3:59am UTC.
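
The per-team submission limits from the FAQ can be expressed as a small client-side tracker, for example to avoid wasting test uploads. This is a hypothetical helper, not part of CodaLab or the DevKit; the server enforces the real limits, and how CodaLab defines a "day" (for example, in UTC) is an assumption left to the caller.

```python
from collections import Counter
from datetime import date

# Limits stated in the FAQ: (max uploads per day, max uploads in total or None).
LIMITS = {
    "validation": (20, None),  # no restriction on the total number
    "test": (1, 20),
}


class SubmissionTracker:
    """Counts uploads per phase and per day (hypothetical helper)."""

    def __init__(self):
        self.per_day = Counter()  # (phase, day) -> uploads on that day
        self.total = Counter()    # phase -> uploads so far

    def can_submit(self, phase: str, day: date) -> bool:
        daily_max, total_max = LIMITS[phase]
        if self.per_day[(phase, day)] >= daily_max:
            return False
        if total_max is not None and self.total[phase] >= total_max:
            return False
        return True

    def record(self, phase: str, day: date) -> None:
        if not self.can_submit(phase, day):
            raise RuntimeError(f"{phase} upload limit reached on {day}")
        self.per_day[(phase, day)] += 1
        self.total[phase] += 1


tracker = SubmissionTracker()
tracker.record("test", date(2017, 9, 10))
print(tracker.can_submit("test", date(2017, 9, 10)))  # False: 1 test upload per day
print(tracker.can_submit("test", date(2017, 9, 11)))  # True
```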

Workshop

The challenge is associated with the 4th annual TASK-CV workshop, being held at ICCV 2017 in Venice, Italy. Challenge participants are invited to submit abstracts to the workshop, but this is not required for challenge participation. If you wish to submit an abstract, please see the TASK-CV website for deadlines. The top performing teams will be invited to give a talk about their results at the workshop during a special session.

Organizers

Kate Saenko (Boston University), Ben Usman (Boston University), Xingchao Peng (Boston University), Neela Kaushik (Boston University), Judy Hoffman (Stanford University), Dequan Wang (UC Berkeley)

Sponsors